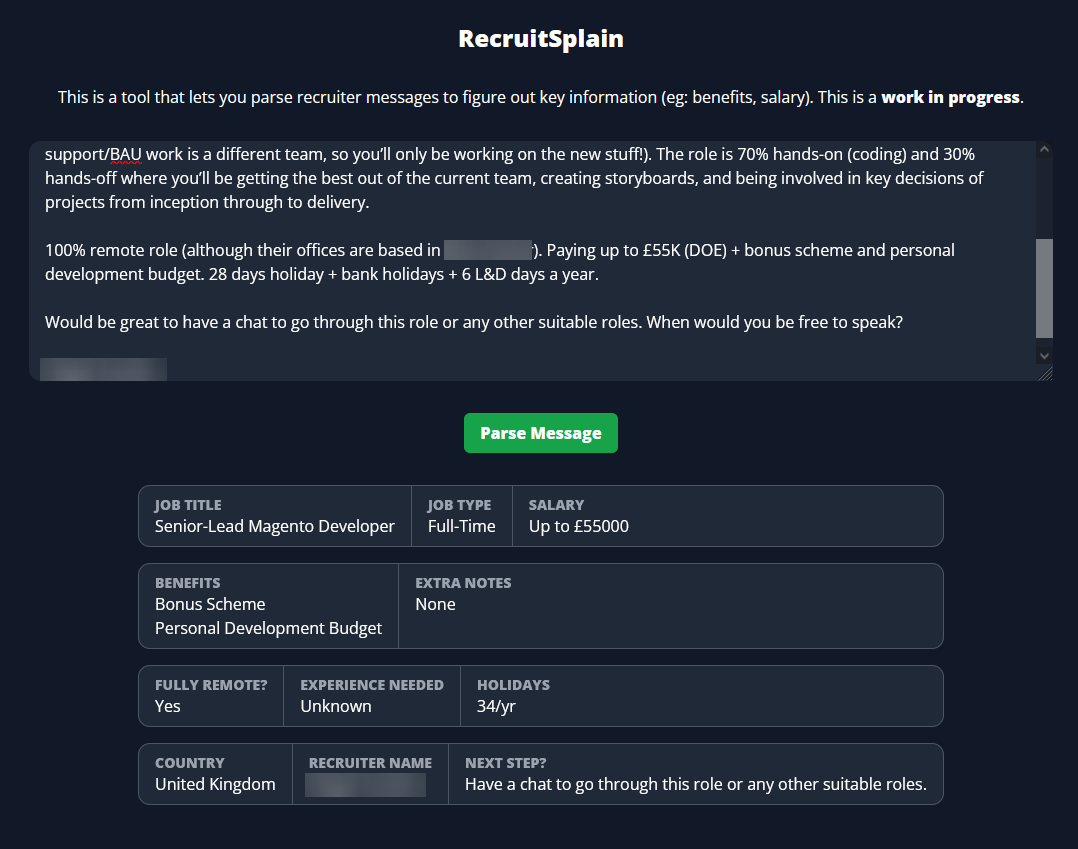

tl;dr - I made a tool that parses recruiter messages from LinkedIn and tells me what the key bits of information are. You can try it yourself at RecruitSplain.com!

Recruiters on LinkedIn can be a bit frustrating to deal with. They tend to add a lot of noise to their messages and are usually vague because they "want to find the right candidate".

I get that, but I'm not a huge fan of it. I also don't like having to constantly send over my CV, join them in endless 2 minute calls that could have been messages, and have to jump through hoop after hoop to see if a position is even compatible with me.

A few years ago, I had a great idea: I was going to automate this! It would have saved me a lot of time and hassle, but I quickly realised that it was going to be next to impossible for a few reasons:

- LinkedIn does not (really) let you integrate with their messages. Microsoft currently states that it's available only for select partners (which I doubt would ever include me, unfortunately);

- It's probably not regex-able. When you are lucky enough to receive salary information in the first message, it'll come in so many different formats that making a regex to try and parse out the salary information would incur the wrath of the underworld;

- There's actually (sometimes) a good amount of information you might want to consider - flexible hours, fully remote working, profit sharing schemes, company reputation, etc. - which may influence your decision.

OK - so, it doesn't seem like a viable side project. Why am I writing about it now?

A few weeks ago I made (and am still fixing bugs for...) Chess vs. GPT, a simple webapp that lets you play chess vs ChatGPT. It was inspired by a hilarious match of ChatGPT vs Stockfish (the current leading chess engine) that included a whole lot of moves from ChatGPT that simply were not legal in the slightest. Ever since then, I've been looking for interesting (but niche) applications for ChatGPT.

Yesterday, an idea popped into my head: what about recruiter messages? Can ChatGPT reliably handle them?

It turns out, it can (kinda).

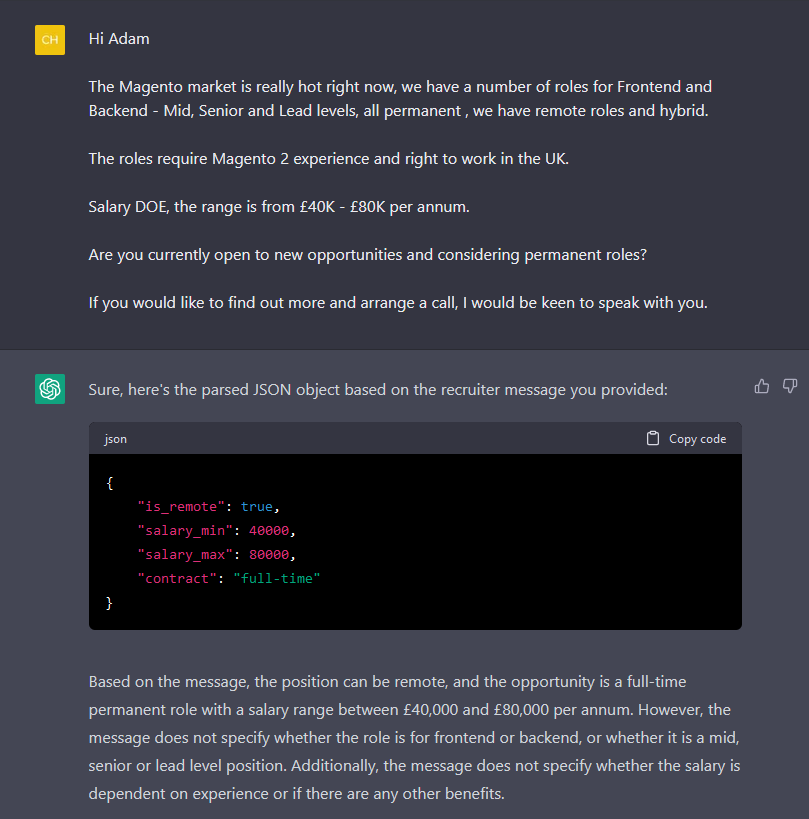

My first prompt was simple:

You are a parser that takes in recruiter messages and converts them in to a JSON object with the following parameters:

- `is_remote` - whether or not the opportunity is remote

- `salary_min` - the minimum salary

- `salary_max` - the maximum salary

- `contract` - whether or not the position is a contract/freelance position, or a full-time role

Please respond to further messages with only the parsed JSON object. If you can't find the information, set the parameter to `NULL`.

To my surprise, it (mostly) worked!

This is quite exciting, because it just works. There were a few bugs, however:

- I expected `contract` to be a boolean value (i.e. `false` for full-time work, `true` for contract/freelance);

- ChatGPT is outputting a lot of prose outside of the JSON object - some of it is actually quite useful - but that would not be great for an API response
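The post goes on to fix the stray prose in the prompt itself. As a defensive complement (not something the original app is known to do), the caller could also pull the JSON object out of a chatty reply before parsing it. The sketch below is a minimal version under a loud assumption: the reply contains exactly one JSON object and no other braces. `extractJson` and the sample reply are hypothetical.

```javascript
// Pull the outermost {...} span out of a reply that may contain extra prose.
// Assumption: the reply holds exactly one JSON object and no stray braces in the prose.
function extractJson(reply) {
  const start = reply.indexOf('{');
  const end = reply.lastIndexOf('}');
  if (start === -1 || end <= start) {
    return null; // no object-looking span found
  }
  try {
    return JSON.parse(reply.slice(start, end + 1));
  } catch (err) {
    return null; // the span was not valid JSON
  }
}

// Made-up reply for illustration only
const chattyReply =
  'Here is the parsed message:\n' +
  '{"is_remote": true, "salary_min": 50000, "salary_max": null, "contract": false}\n' +
  'Let me know if you need anything else!';
console.log(extractJson(chattyReply));
```

Returning `null` rather than throwing keeps the caller's error handling down to a single check.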

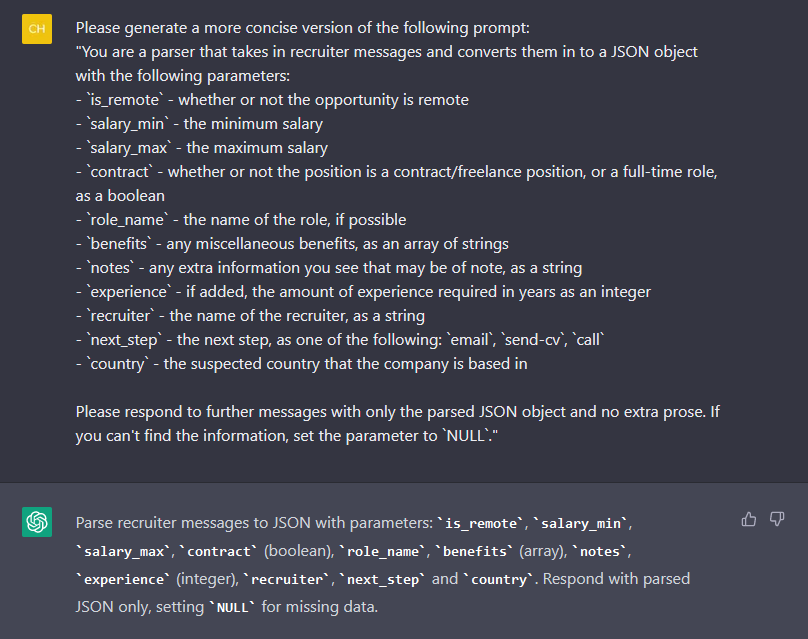

Tuning a ChatGPT prompt

Tuning a ChatGPT prompt is a bit weird as a programmer, because you need to be able to describe what you want it to do, rather than implementing it. As a result, there are some liberties you may take as a programmer (the contract field being a boolean, for instance) that aren't immediately obvious otherwise.

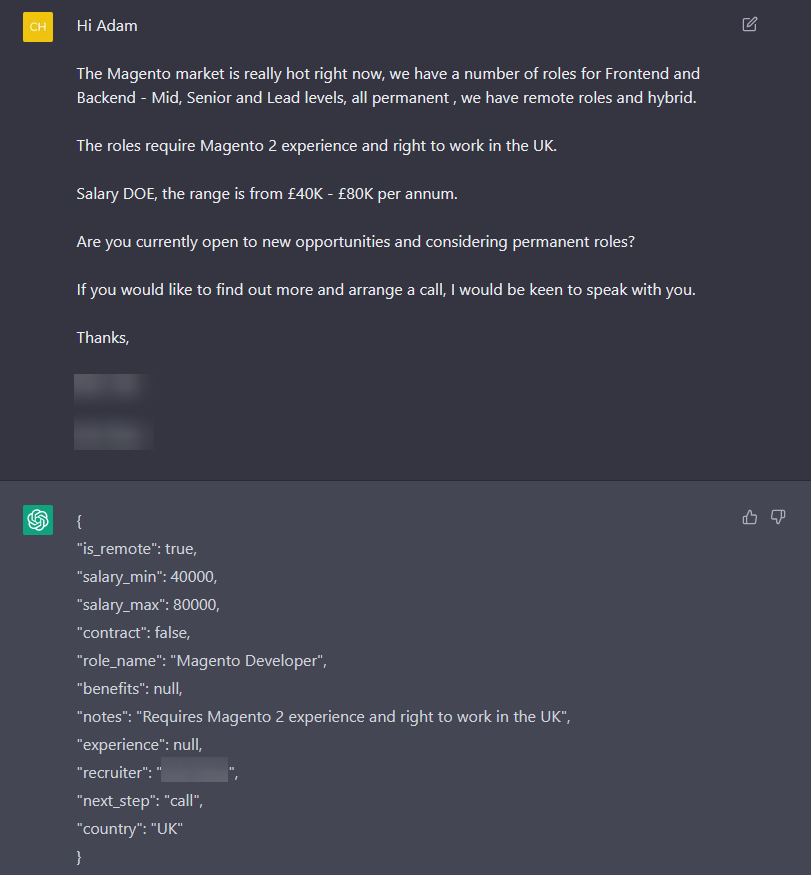

So, I went back to the drawing board with some new requirements:

- I wanted ChatGPT to try and determine if any additional benefits were mentioned in the message, such as flexible working, profit share schemes etc.

- I wanted to add in some extra data, as well, such as the suspected country, any experience required, the name of the recruiter, and the "next step" to proceed;

- I wanted to remove the prose and have ChatGPT return it as a new parameter

After some tinkering, I came up with a new prompt:

You are a parser that takes in recruiter messages and converts them in to a JSON object with the following parameters:

- `is_remote` - whether or not the opportunity is remote

- `salary_min` - the minimum salary

- `salary_max` - the maximum salary

- `contract` - whether or not the position is a contract/freelance position, or a full-time role, as a boolean

- `role_name` - the name of the role, if possible

- `benefits` - any miscellaneous benefits, as an array of strings

- `notes` - any extra information you see that may be of note, as a string

- `experience` - if added, the amount of experience required in years as an integer

- `recruiter` - the name of the recruiter, as a string

Please respond to further messages with only the parsed JSON object and no extra prose. If you can't find the information, set the parameter to `NULL`.
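Since the prompt only describes the shape it wants, it can help to check the reply against that shape in code. The sketch below is one possible check, not part of the original app: `SCHEMA` and `validateParsed` are hypothetical names, and treating the salary fields as numbers is an assumption, because the prompt does not state their type.

```javascript
// Expected type for each field named in the prompt above; null is always allowed.
// Assumption: salaries are numbers (the prompt does not specify a type for them).
const SCHEMA = {
  is_remote: 'boolean',
  salary_min: 'number',
  salary_max: 'number',
  contract: 'boolean',
  role_name: 'string',
  benefits: 'array',
  notes: 'string',
  experience: 'number',
  recruiter: 'string',
};

// typeof reports arrays as "object", so handle them separately.
function typeOf(value) {
  return Array.isArray(value) ? 'array' : typeof value;
}

// Returns a list of human-readable problems; an empty list means the object looks sane.
function validateParsed(parsed) {
  const problems = [];
  for (const [key, expected] of Object.entries(SCHEMA)) {
    if (!(key in parsed)) {
      problems.push(`missing field: ${key}`);
    } else if (parsed[key] !== null && typeOf(parsed[key]) !== expected) {
      problems.push(`${key} should be ${expected} or null, got ${typeOf(parsed[key])}`);
    }
  }
  return problems;
}

// Made-up result in which `contract` came back as a string instead of a boolean
const sampleResult = {
  is_remote: true, salary_min: 50000, salary_max: 60000, contract: 'full-time',
  role_name: 'Backend Engineer', benefits: [], notes: null, experience: 3, recruiter: 'Sam',
};
console.log(validateParsed(sampleResult)); // one problem, reported for `contract`
```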

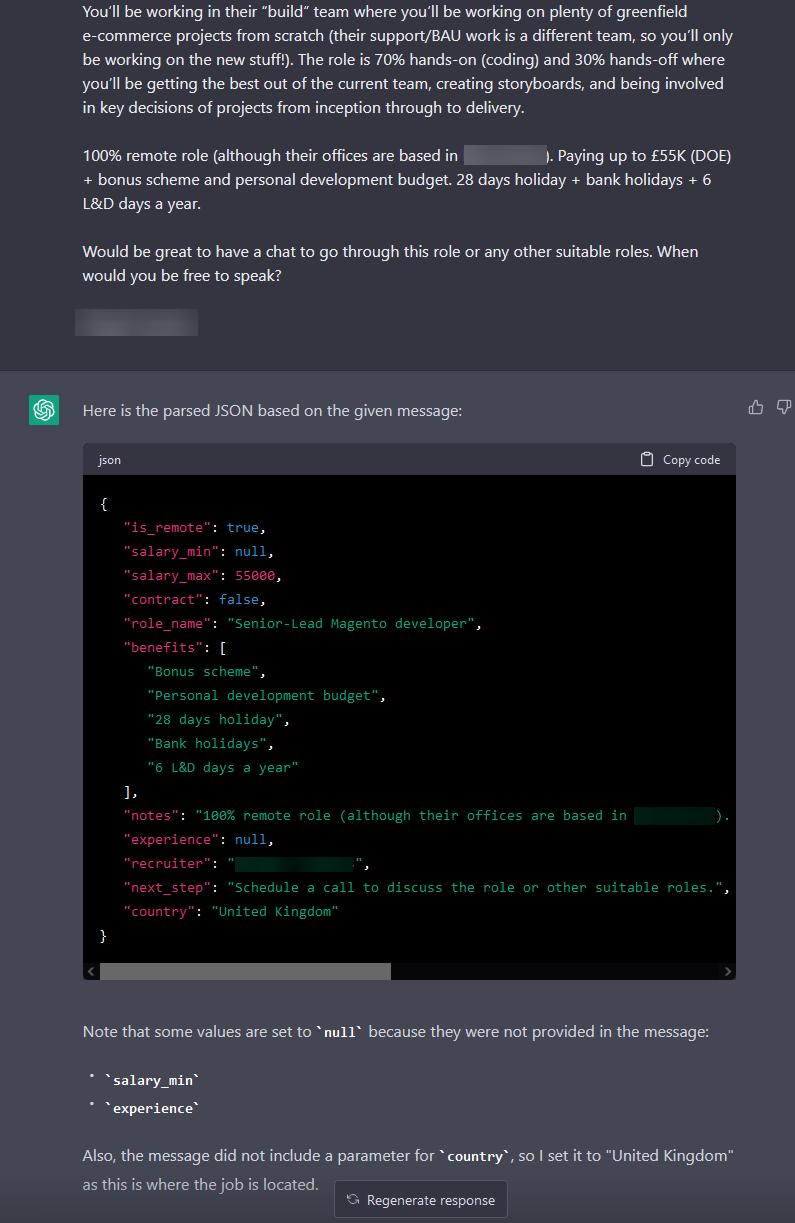

Testing a ChatGPT prompt

OK - so I had something that worked for some messages, but will it work for a wider range of them?

I started looking through a bunch of my old messages, looking for ones which might be particularly difficult to parse out, and by and large it really did just work!

(would it be possible to make a ChatGPT prompt to validate results from another prompt? Tune in next time...)

The funky part: creating a (web)app

I decided that I wanted to turn this into a (basic) webapp - maybe it'll help people out!

I decided on the name RecruitSplain (because it 'splains recruiters), registered a domain, and got to work!

Optimising the Prompt

OpenAI provides an API to interface with GPT-3.5 (the current version of GPT used by ChatGPT) for a low-ish cost. The issue, however, is scaling. OpenAI charges $0.002/1,000 "tokens" which includes your starting prompt.
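To get a feel for how that price scales, here is a rough back-of-the-envelope calculation. The $0.002 per 1,000 tokens figure comes from the paragraph above; the token counts and request volume are made-up numbers for illustration, and `estimateCost` is a hypothetical helper.

```javascript
// Price quoted above: $0.002 per 1,000 tokens, counting prompt and reply together.
const PRICE_PER_1K_TOKENS = 0.002;

// Estimated cost in dollars for a number of identical requests.
function estimateCost(promptTokens, completionTokens, requests = 1) {
  const totalTokens = (promptTokens + completionTokens) * requests;
  return (totalTokens / 1000) * PRICE_PER_1K_TOKENS;
}

// Hypothetical workload: 500 prompt tokens (system prompt + recruiter message),
// 200 reply tokens, 10,000 requests.
console.log(estimateCost(500, 200, 10000).toFixed(2)); // prints 14.00
```

Because the system prompt is resent with every request, each token shaved off it is saved on every single call.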

Our existing prompt is quite verbose, so how can we minimise the cost?

Funnily enough, ChatGPT can do that for us too!

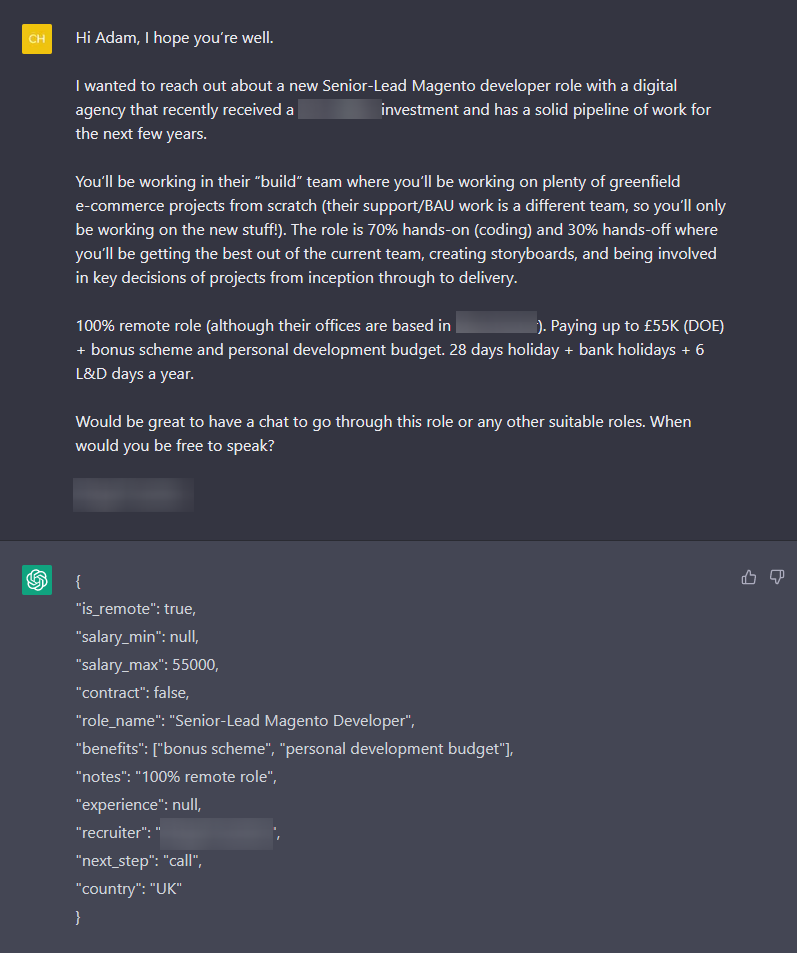

Interestingly, using the "optimised" prompt actually produces different results.

Using the "complicated" example from before, it actually adds extra details into the `benefits` field! My hypothesis for this is a mix of two factors:

- The "optimised" prompt is a lot shorter, which means that the model's "short term memory" may be better used;

- The "optimised" prompt omits a lot of information which may otherwise describe hidden constraints

With that being said - the prompt is (mostly) working just fine, so a few tweaks and we'll be good to go!

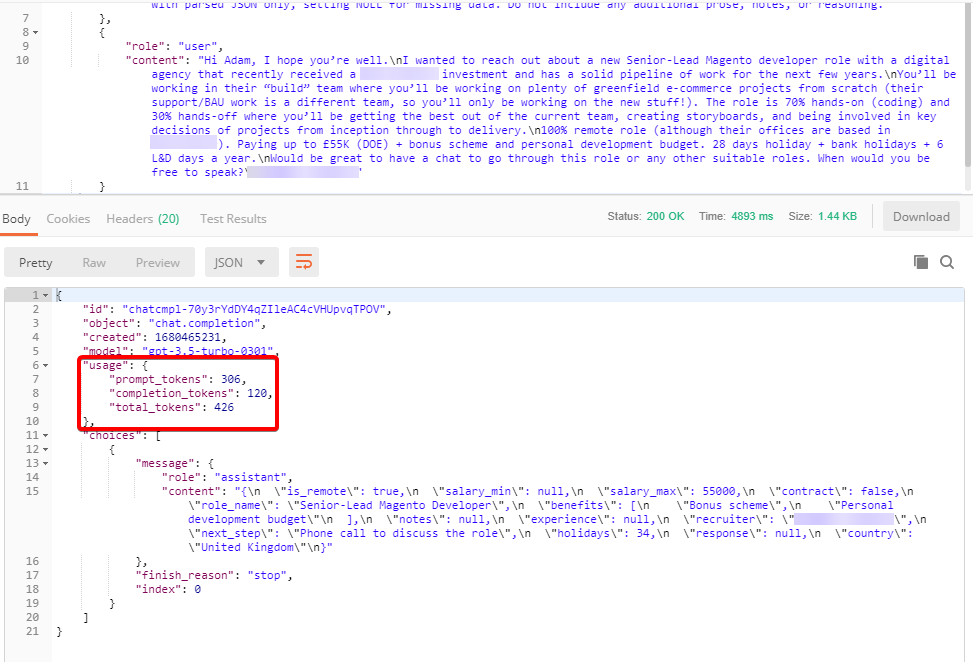

Using ChatGPT's API

Using the API is really simple. Once you've got your API key, you need to start by creating the JSON to use.

For our example, it'll look something like:

```json
{
  "model": "gpt-3.5-turbo",
  "messages": [
    {
      "role": "system",
      "content": "Parse recruiter messages to JSON with parameters: is_remote, salary_min, salary_max, contract (boolean), role_name, benefits (array), notes, experience (integer), recruiter, next_step, holidays (integer), response, and country. Respond with parsed JSON only, setting NULL for missing data. Do not include any additional prose, notes, or reasoning."
    },
    {
      "role": "user",
      "content": "<MESSAGE FROM USER>"
    }
  ]
}
```

After that, we just POST that over to https://api.openai.com/v1/chat/completions (with our API key!) and we have our response under `.choices[0].message` in the response object.
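As a rough sketch of that request in code, the helpers below build the arguments for `fetch` and read the reply back out. `buildChatRequest` and `readReply` are hypothetical names; the endpoint, model, and response path are the ones given above. The network call itself is left commented out because it needs a real API key.

```javascript
const OPENAI_URL = 'https://api.openai.com/v1/chat/completions';

// Build the arguments for fetch(); the key, prompt and message are supplied by the caller.
function buildChatRequest(apiKey, systemPrompt, userMessage) {
  return {
    url: OPENAI_URL,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model: 'gpt-3.5-turbo',
        messages: [
          { role: 'system', content: systemPrompt },
          { role: 'user', content: userMessage },
        ],
      }),
    },
  };
}

// The assistant's text lives at .choices[0].message.content in the response body.
function readReply(responseBody) {
  const choice = responseBody.choices && responseBody.choices[0];
  return choice ? choice.message.content : null;
}

// Usage inside an async function (needs a real key and network access):
// const { url, options } = buildChatRequest(key, prompt, recruiterMessage);
// const body = await (await fetch(url, options)).json();
// console.log(readReply(body));
```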

Using Postman to emulate this, we can even see how many tokens we're using - the majority of which are going to come from the user message!
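The same numbers are available in code: the chat completions response carries a `usage` object with `prompt_tokens`, `completion_tokens` and `total_tokens`. Note that `prompt_tokens` covers the system prompt and the user message together, so it cannot separate the two. `summariseUsage` is a hypothetical helper and the sample figures are invented.

```javascript
// Report what share of the billed tokens went to the prompt vs. the reply.
function summariseUsage(responseBody) {
  const { prompt_tokens, completion_tokens, total_tokens } = responseBody.usage;
  return {
    total: total_tokens,
    promptPercent: Math.round((prompt_tokens / total_tokens) * 100),
    completionPercent: Math.round((completion_tokens / total_tokens) * 100),
  };
}

// Invented usage figures for illustration
const sampleBody = { usage: { prompt_tokens: 420, completion_tokens: 80, total_tokens: 500 } };
console.log(summariseUsage(sampleBody).promptPercent); // prints 84
```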

Creating a webapp

At this point, we have everything we need - except an app! I'm only going to skim over this part, because there is no "one size fits all" approach here.

For me, I'll be using:

- Cloudflare Workers (to proxy API requests)

- SvelteKit (frontend) + Tailwind (styling)

- Cloudflare Pages (to deploy the app)

Note: I could use CF Pages for all parts of the app, but this is just my preferred way

Initial set up

```shell
npm create svelte@latest chatgpt-app
cd chatgpt-app
npm install
npm i -D tailwindcss svelte-preprocess postcss autoprefixer @sveltejs/adapter-cloudflare
npm run dev
```

You'll also need to set `adapter` in `svelte.config.js` to `@sveltejs/adapter-cloudflare`, and create `tailwind.config.cjs` and `postcss.config.cjs` files:

```javascript
// tailwind.config.cjs
/** @type {import('tailwindcss').Config} */
module.exports = {
  content: ['./src/**/*.{html,js,svelte,ts}'],
  theme: {
    extend: {
      spacing: {
        '128': '32rem'
      },
      fontFamily: {
        'sans': [ 'Open Sans', 'Arial', 'sans-serif' ]
      }
    },
  },
  plugins: [],
}
```

```javascript
// postcss.config.cjs
module.exports = {
  plugins: {
    tailwindcss: {},
    autoprefixer: {},
  },
}
```

Lastly, we'll need to create the file `src/app.css` and include it in `src/routes/+page.svelte`:

```css
/* src/app.css */
@import url('https://fonts.googleapis.com/css2?family=Open+Sans:wght@400;800&display=swap');

@tailwind base;
@tailwind components;
@tailwind utilities;
```

```svelte
<!-- src/routes/+page.svelte -->
<script>
  import '../app.css';
</script>
```

Boom - we're done with the boilerplate!

Worker Script

The worker script is super simple. Create a new worker, name it whatever you want, and proxy the request like so:

```javascript
export default {
  async fetch(request, env) {
    // Placeholder: paste your OpenAI API key here
    const API_KEY = '<KEY FROM OPENAI>';

    // The frontend sends the messages array as the request body
    let messages = await request.json();

    // Forward the messages to OpenAI's chat completions endpoint
    const response = await fetch('https://api.openai.com/v1/chat/completions', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${API_KEY}`
      },
      body: JSON.stringify({
        model: 'gpt-3.5-turbo',
        messages
      })
    });

    // Relay OpenAI's JSON response back to the caller
    let final = await response.json();
    return new Response(JSON.stringify(final));
  }
}
```

Implementing rate limiting, CORS headers, validations, etc. are left as an exercise for the user.
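As a starting point for that exercise, here is a minimal sketch of the CORS headers and a payload check. Everything in it is an assumption rather than the original app's code: `ALLOWED_ORIGIN` is a placeholder domain, the 4,000 character cap is arbitrary, and `corsHeaders` and `isValidMessages` are hypothetical helpers.

```javascript
// Placeholder origin; replace with wherever the frontend is hosted.
const ALLOWED_ORIGIN = 'https://example.com';

// Headers a browser needs before it lets the frontend read the Worker's response.
function corsHeaders() {
  return {
    'Access-Control-Allow-Origin': ALLOWED_ORIGIN,
    'Access-Control-Allow-Methods': 'POST, OPTIONS',
    'Access-Control-Allow-Headers': 'Content-Type',
  };
}

// Accept only a non-empty array of { role, content } objects with a
// known role and a bounded, non-empty string as content.
function isValidMessages(payload, maxChars = 4000) {
  if (!Array.isArray(payload) || payload.length === 0) return false;
  return payload.every(
    (m) =>
      m !== null &&
      typeof m === 'object' &&
      ['system', 'user'].includes(m.role) &&
      typeof m.content === 'string' &&
      m.content.length > 0 &&
      m.content.length <= maxChars
  );
}

// Inside the Worker's fetch handler, these could be used roughly like so:
// if (request.method === 'OPTIONS') {
//   return new Response(null, { status: 204, headers: corsHeaders() });
// }
// if (!isValidMessages(messages)) {
//   return new Response('Bad request', { status: 400, headers: corsHeaders() });
// }
```

Rejecting oversized or malformed payloads before calling OpenAI also avoids paying for tokens on requests that were never going to parse.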

Actually building a webapp

We now have almost everything we need! In this example, we'll just take in the message from a textbox and, when a "Parse Message" button is pressed, send the data to our API.

Again - just glossing over this as it's really simple:

```svelte
<script>
  import '../app.css';

  // Sends the recruiter message to our Worker; returns the parsed object, or false on failure
  async function askGpt(prompt) {
    let payload = [
      {
        role: 'system',
        content: '<CHATGPT PROMPT>'
      },
      {
        role: 'user',
        content: prompt
      }
    ];

    let res = await fetch(`https://my.api.com/`, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json'
      },
      body: JSON.stringify(payload)
    });

    if (res.ok) {
      res = await res.json();
      if (res.hasOwnProperty('choices')) {
        // The model's reply is a JSON string, so parse it into an object
        return JSON.parse(res.choices[0].message.content);
      }
    }

    return false;
  }

  let textareaContent;
  let result; // holds the parsed message so it can be shown on the page
</script>

<textarea bind:value={textareaContent}></textarea>
<button on:click={async () => (result = await askGpt(textareaContent))}>Parse Message</button>

{#if result}
  <pre>{JSON.stringify(result, null, 2)}</pre>
{/if}
```

...and that's it! I'm omitting a lot of the "clutter" which would include styling and any other funky behaviour you may want to add. This isn't a step by step guide - just an example!

The final app

If you want to use the app yourself - either to test it or to just play around with it - then visit RecruitSplain.com! If you find anything that breaks it, let me know!